Elina Filatova, Data Scientist at NeuronUP, explains in this article how the Time-Aware LSTM (TA-LSTM) model allows highly accurate prediction of user outcomes even when their data is generated irregularly.

At NeuronUP we actively implement the most advanced machine learning methods that allow us to predict user behavior with high accuracy, identify trends, and forecast future outcomes based on their historical data.

However, traditional machine learning approaches struggle when data are generated irregularly and the intervals between observations are chaotic or user-specific. In such cases, conventional models become ineffective because they do not consider each person’s unique pace and frequency of activity.

To solve precisely this problem, we use a specialized variant of the LSTM neural network called Time-Aware LSTM (TA-LSTM). This model takes the time intervals between events into account, which makes it possible to predict time series even when the data are irregular.

Objective of the study and main challenges

At NeuronUP we have each user's metrics from previous days, and our goal is to predict their result the next time they perform the activity. At first glance this might seem like a simple task, but in practice several important difficulties arise:

- Individual user pace: Each user has a unique training pattern; some play daily, others weekly, and some may take long breaks of up to a month, returning to activities unexpectedly. For example, Alex trains steadily every day, while José prefers weekly intervals. If their results are averaged without considering this frequency, crucial details will be lost.

- Variety of activities and their differential impact on users: Activities in NeuronUP are designed to improve different cognitive functions such as memory, attention, and logic, among others. Each activity has a particular difficulty level that varies by user. What is easy for one person may be a great challenge for another.

- Focus on specific activities: NeuronUP specialists determine which activities to assign to each user. For example, Carmen regularly performs memory, logic, and math exercises, while Pablo prefers attention exercises exclusively. Therefore, the predictive model must consider each player’s personalized path.

Of course, all these nuances complicate both the proper data preparation process and the training of machine learning models themselves. Ignoring players’ individual pace, their irregular intervals, and the different difficulty levels of activities inevitably leads to the loss of key information. As a consequence, there is a risk of obtaining less accurate predictions, which in turn can reduce the effectiveness of personalized recommendations in NeuronUP.


Solution: Time-Aware LSTM

To effectively overcome all the difficulties mentioned above, the NeuronUP data team developed a specific solution based on the custom Time-Aware LSTM (TA-LSTM) model. This is an enhanced version of the standard LSTM neural network, which is capable of considering not only events in time series but also the time intervals between them.

Data preparation: why is the time interval so important?

Our model receives input data prepared in a specific way. Each record is a two-dimensional matrix that contains a player’s results in chronological order, together with the time interval, in days, between consecutive sessions of the activity. To understand why considering the time interval is so important, let us meet two characters who will help illustrate this point clearly.

Imagine a marathon called “The Fastest and Most Agile,” for which two athletes are preparing:

- Neuronito – a disciplined and determined athlete. He never skips a training session, works on himself every day and meticulously takes care of his nutrition. Neuronito progresses constantly: with each new training session he becomes faster, more resilient and more confident. Given his stable training pace, we can easily predict that he will perform excellently in the marathon.

- Lentonito – a talented but less disciplined athlete. His training sessions are irregular. Today he trains enthusiastically, but tomorrow he prefers to rest enjoying his paella and a good ham. These inconsistent sessions generate fluctuations in his performance: sometimes he improves, other times not, but without stable growth. Lentonito is likely to reach the finish line with less impressive results.

In this way, we have seen with a simple example how much the time intervals between events influence the final results. It is precisely this information about stability and regularity in the “trainings” that we provide to our model.

If we did not consider the time intervals, the model would perceive these two athletes as identical and fail to notice the difference in their training approach. TA-LSTM, by contrast, detects this key feature, analyzes the individual intervals between events, and makes more accurate predictions that take into account each participant’s unique pace (or, in our case at NeuronUP, each user’s).

But that’s not all! As you may have noticed, we also mentioned Neuronito’s and Lentonito’s nutrition. Not by coincidence, but because this data represents additional features that also significantly influence the final outcome.

Similarly, our model is able to consider not only time intervals but also other important features, such as age, gender, diagnosis, and even user preferences.

This considerably improves prediction accuracy, just like in our example with the protagonists, where we took into account their dietary regimen and its influence on success.
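The input format described in this section can be illustrated with a minimal sketch. It assumes each session is stored as a `(date, score)` pair and that the first session, which has no predecessor, gets an interval of 0. The function name, the record layout, and the sample values are all hypothetical and not taken from the NeuronUP pipeline. Static features such as age or diagnosis are left out for brevity.

```python
from datetime import date


def build_sequence(records):
    """Turn (date, score) records into rows of [score, delta_days].

    delta_days is the gap to the previous session. The first session has
    no predecessor, so its gap is set to 0 (an assumption of this sketch).
    """
    ordered = sorted(records, key=lambda r: r[0])  # chronological order
    rows = []
    previous_day = None
    for day, score in ordered:
        delta_days = 0 if previous_day is None else (day - previous_day).days
        rows.append([score, float(delta_days)])
        previous_day = day
    return rows


# Made-up users: one plays daily, the other at irregular intervals.
daily = [(date(2025, 3, 1), 70), (date(2025, 3, 2), 72), (date(2025, 3, 3), 75)]
irregular = [(date(2025, 3, 1), 70), (date(2025, 3, 9), 66), (date(2025, 4, 2), 68)]

daily_seq = build_sequence(daily)
irregular_seq = build_sequence(irregular)
print(daily_seq)      # [[70, 0.0], [72, 1.0], [75, 1.0]]
print(irregular_seq)  # [[70, 0.0], [66, 8.0], [68, 24.0]]
```

Without the second column, both users would look like plain three-step sequences. The interval column is what lets a model tell the steady pace from the irregular one.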

Technical details

Now that we have seen the main concept, let us move on to the technical aspect of how the Time-Aware LSTM (TA-LSTM) model works. This model is a modification of the standard LSTM cell, specifically designed to take into account the time intervals between sequential events.

The main objective of TA-LSTM is the adaptive update of the model’s internal memory state based on the time elapsed since the last observation. This approach is crucial when working with irregular time series, exactly the type of data we handle at NeuronUP.

The input vector at time t is represented as:

\[\text{inputs}_t = [x_t,\, \Delta t]\]

Where:

- \(x_t \in \mathbb{R}^d\) – is the feature vector that describes the current event (each day the activity is performed).

- \(\Delta t \in \mathbb{R}\) – is the time interval between the current and the previous observation.

The model’s previous states are represented in the standard way:

\[h_{t-1},\; C_{t-1}\]

Where:

- \(h_{t-1}\) – is the hidden state vector at the previous step.

- \(C_{t-1}\) – is the memory state (LSTM cell) at the previous step.

To account for the influence of the time interval \(\Delta t\), the model employs a special memory decay mechanism, described by the following formula:

\[\gamma_t = e^{-\text{ReLU}(w_d \cdot \Delta t + b_d)}\]

Where:

- \(w_d,\; b_d\) – are trainable parameters of the model.

- \(\text{ReLU}(x) = \max(0, x)\) – is the activation function that prevents negative values.

The decay coefficient \(\gamma_t\) controls the update of the memory state.
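To get a feel for the decay formula, the short sketch below evaluates it for a few intervals. The values `w_d = 0.1` and `b_d = 0.0` are illustrative assumptions, not parameters learned by the NeuronUP model.

```python
import math


def decay_coefficient(delta_t, w_d, b_d):
    """gamma_t = exp(-ReLU(w_d * delta_t + b_d))."""
    return math.exp(-max(0.0, w_d * delta_t + b_d))


w_d, b_d = 0.1, 0.0  # illustrative values, not trained parameters
for delta_t in (0, 1, 7, 30):
    print(delta_t, round(decay_coefficient(delta_t, w_d, b_d), 3))
```

With these assumed values the coefficient is 1.0 for a zero-day gap, about 0.905 after one day, about 0.497 after a week, and about 0.05 after thirty days. Because of the ReLU, the exponent is never positive, so the coefficient always stays in the range (0, 1] and can only shrink the memory, never amplify it.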

The memory update is defined as:

\[\bar{C}_{t-1} = \gamma_t \cdot C_{t-1}\]

This means that when the time interval increases, the value of \(\gamma_t\) tends to zero (provided the learned weight \(w_d\) is positive), which causes greater “forgetting” of previous memory states.

In the next step, the corrected memory state \(\bar{C}_{t-1}\) is fed into the standard LSTM equations:

\[h_t,\; C_t = \text{LSTM}(x_t, h_{t-1}, \bar{C}_{t-1})\]

The output values \(h_t\) and the updated memory state \(C_t\) are used in the next step of the model, ensuring accurate prediction and the ability to consider irregular time intervals between events.
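The step described above (decay the previous memory state, then apply the usual LSTM update) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the NeuronUP implementation: the gate layout is the textbook LSTM, the weights are random rather than trained, and the names `ta_lstm_step` and `init_params` are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, b, v):
    """Return W @ v + b for a matrix W stored as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def ta_lstm_step(x_t, delta_t, h_prev, c_prev, params):
    """One step: decay the previous cell state, then run a standard LSTM update."""
    # Memory decay: gamma_t = exp(-ReLU(w_d * delta_t + b_d))
    gamma_t = math.exp(-max(0.0, params["w_d"] * delta_t + params["b_d"]))
    c_bar = [gamma_t * c for c in c_prev]

    # Standard LSTM gates computed from the concatenation [x_t, h_prev]
    z = x_t + h_prev
    i = [sigmoid(a) for a in affine(params["W_i"], params["b_i"], z)]    # input gate
    f = [sigmoid(a) for a in affine(params["W_f"], params["b_f"], z)]    # forget gate
    o = [sigmoid(a) for a in affine(params["W_o"], params["b_o"], z)]    # output gate
    g = [math.tanh(a) for a in affine(params["W_g"], params["b_g"], z)]  # candidate

    c_t = [f_k * cb + i_k * g_k for f_k, cb, i_k, g_k in zip(f, c_bar, i, g)]
    h_t = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c_t)]
    return h_t, c_t


def init_params(input_dim, hidden_dim, seed=0):
    """Random, untrained weights; w_d and b_d are illustrative values."""
    rng = random.Random(seed)
    params = {"w_d": 0.1, "b_d": 0.0}
    for gate in "ifog":
        params["W_" + gate] = [
            [rng.uniform(-0.5, 0.5) for _ in range(input_dim + hidden_dim)]
            for _ in range(hidden_dim)
        ]
        params["b_" + gate] = [0.0] * hidden_dim
    return params


params = init_params(input_dim=1, hidden_dim=3)
# Made-up sequence of (features, days since the previous session)
sequence = [([0.70], 0.0), ([0.66], 8.0), ([0.68], 24.0)]
h, c = [0.0] * 3, [0.0] * 3
for x_t, delta_t in sequence:
    h, c = ta_lstm_step(x_t, delta_t, h, c, params)
print(h)
```

The only difference from an ordinary LSTM step is the two lines that compute `gamma_t` and `c_bar`. After a very long gap the decayed memory is close to zero, so the step behaves almost as if the sequence had started fresh.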

Why use prediction?

Prediction allows us to anticipate a patient’s future outcomes with high accuracy by first analyzing their data with our custom TA-LSTM model. To verify prediction accuracy, we took a sample of real patient data and applied the model to their previous activity records. Each patient’s last day of activity was excluded from the input data so that the real result could be compared with the model-generated prediction.

In most cases, the results predicted by our model closely matched the players’ actual values. However, we also identified some interesting exceptions where the prediction differed significantly from the real outcome.
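The hold-out check described above can be sketched as follows. The article does not state which error metric was used, so the mean absolute error here is an assumption. The predictor is a placeholder that repeats the latest score and merely stands in for the trained model, and all scores are made up.

```python
def split_last(sequence):
    """Separate the history from the held-out last result."""
    if len(sequence) < 2:
        raise ValueError("need at least two sessions to hold one out")
    return sequence[:-1], sequence[-1]


def mean_absolute_error(histories, predict):
    """Average |actual - predicted| over users, each scored on their last session."""
    errors = []
    for sequence in histories:
        history, actual = split_last(sequence)
        errors.append(abs(actual - predict(history)))
    return sum(errors) / len(errors)


def last_value_baseline(history):
    # Placeholder predictor (repeats the latest score); stands in for the trained model.
    return history[-1]


scores_by_user = [[70, 72, 75, 74], [60, 58, 63], [88, 90, 91, 52]]  # made-up scores
mae = mean_absolute_error(scores_by_user, last_value_baseline)
print(mae)  # 15.0
```

Any callable that maps a history to a predicted score can be passed as `predict`, so the same harness could compare a simple baseline against a trained model.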

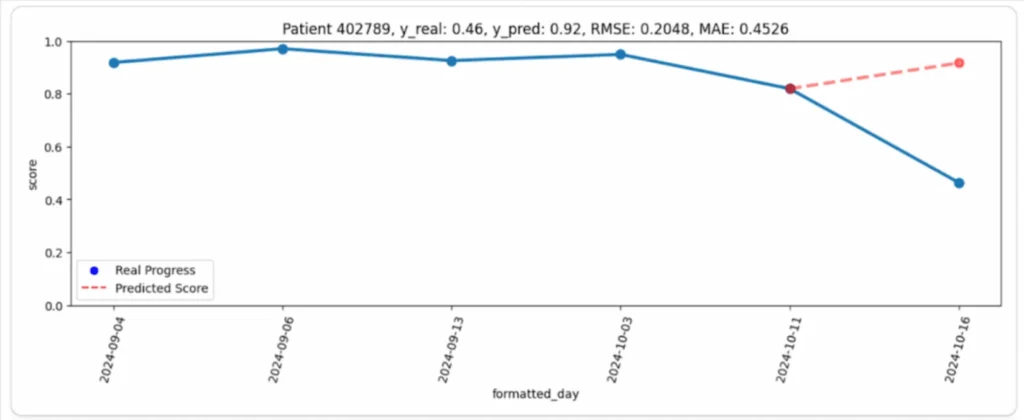

For example, the chart in Image 1 shows the patient’s progress (blue line) and the model’s corresponding prediction (red line). At first glance, the difference seems quite large: the patient maintained a high and stable performance throughout the observation period, but their last result was unexpectedly much lower than predicted. In this case, the model’s prediction seemed much more logical than the real outcome.

Why did this happen?

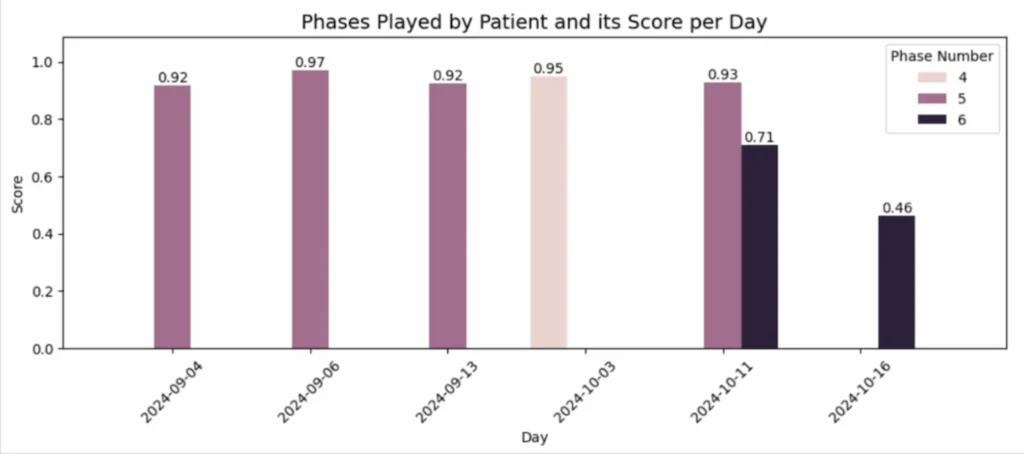

The reason turned out to be simple but important: on all previous days the patient played at easier levels (phases), obtaining consistently high scores. However, on the last day, they chose phase 6, which was more difficult, causing a notable drop in their performance (Image 2).

In this way, the model’s prediction made it possible to recognize an unexpected deviation from the patient’s usual behavior, and a closer look at the data showed that it was caused by an increase in the difficulty level.

This approach gives NeuronUP professionals a powerful tool to detect such situations promptly and quickly analyze the causes of deviations.
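One simple way to surface such cases is to flag sessions where the actual result is far from the prediction. The sketch below is only an illustration: the threshold of 15 points, the tuple layout, and the user identifiers are assumptions, not part of the NeuronUP system.

```python
def flag_deviations(results, threshold):
    """Return (user_id, deviation) for results that differ from the prediction by more than threshold."""
    flagged = []
    for user_id, predicted, actual in results:
        deviation = actual - predicted
        if abs(deviation) > threshold:
            flagged.append((user_id, deviation))
    return flagged


# Made-up (user_id, predicted, actual) triples
results = [("user_a", 74.0, 75.0), ("user_b", 61.0, 63.0), ("user_c", 90.0, 52.0)]
flagged = flag_deviations(results, threshold=15.0)
print(flagged)  # [('user_c', -38.0)]
```

A flagged session is only a prompt for review. As in the example with phase 6, the explanation may lie in a change of difficulty rather than in the patient’s performance.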

Conclusion

The use of Time-Aware LSTM opens new possibilities for the accurate prediction of time series with irregular intervals. Unlike traditional models, TA-LSTM is capable of adapting to each player’s unique pace, taking into account their pauses and activity intervals. Thanks to this approach, our cognitive stimulation platform can not only accurately predict patients’ future outcomes but also promptly detect possible anomalies or unexpected deviations.

At NeuronUP we value your time and are always looking to apply the most effective, innovative, and advanced technologies. Stay tuned to our updates — the best is yet to come!

Bibliography

- Lechner, Mathias, and Ramin Hasani. “Learning Long-Term Dependencies in Irregularly-Sampled Time Series.” arXiv preprint arXiv:2006.04418, 2020, pp. 1-11.

- Baytas, Inci M., et al. “Patient Subtyping via Time-Aware LSTM Networks.” Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’17), 2017, pp. 65-74.

- Nguyen, An, et al. “Time Matters: Time-Aware LSTMs for Predictive Business Process Monitoring.” Lecture Notes in Business Information Processing, Process Mining Workshops, vol. 406, 2021, pp. 112-123.

- Schirmer, Mona, et al. “Modeling Irregular Time Series with Continuous Recurrent Units.” Proceedings of the 39th International Conference on Machine Learning (ICML 2022), vol. 162, 2022, pp. 19388-19405.

- “Time aware long short-term memory.” Wikipedia, https://en.wikipedia.org/wiki/Time_aware_long_short-term_memory. Accessed 12 March 2025.

This article has been translated. Link to the original article in Spanish: Predicción de resultados de jugadores mediante TA-LSTM.