In this article, PhD candidate Marta Arbizu Gómez presents the study "Large language models deconstruct the clinical intuition behind diagnosing autism", which explores the use of large language models in the diagnosis of autism.

Introduction

The diagnosis of autism spectrum disorder (ASD) has traditionally been a complex task, relying heavily on clinical experience, detailed observation and the interpretation of diverse behaviors. Although there are well-defined diagnostic guidelines such as the DSM-5, clinical practice is often guided by an “intuition” that professionals develop after years of experience. But what if we could “read” that intuition and examine it more objectively?

A recent study published in the journal Cell, titled “Large language models deconstruct the clinical intuition behind diagnosing autism”, explores precisely this possibility: using large language models (LLMs) to unravel the patterns clinicians follow when diagnosing autism. The findings are surprising and could have profound implications for how ASD is understood and diagnosed today.

The context: why is it necessary to reassess the way we diagnose autism?

ASD is a neurodevelopmental disorder characterized by challenges in social communication and by restricted, repetitive patterns of behavior and interests. However, these characteristics can vary greatly between individuals, which makes diagnosis a nuanced and sometimes subjective process.

Moreover, standardized diagnostic tools such as the ADOS (Autism Diagnostic Observation Schedule) or the ADI-R (Autism Diagnostic Interview-Revised) give the process structure. Even so, many diagnoses also rely on narrative reports written by the clinicians who observed the patient. In other words, the way the clinician describes the patient can carry great weight in the final diagnosis.

Faced with this reality, the researchers posed a key question: which elements within these written reports are actually guiding diagnostic decisions?


What did the researchers do?

The study authors collected more than 40,000 clinical reports of pediatric patients from the Massachusetts public health system. These reports, written by mental health professionals, contained detailed descriptions of patients’ behavior and functioning.

With this database, the researchers trained several language models, including GPT-4 (developed by OpenAI) and an open-source clinical model called Clinician-LLaMA. The idea was for the models to learn to predict whether a clinical report corresponded to a patient with an ASD diagnosis or not, based only on the text.
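The study's models are large language models. The sketch below is not one. It is a minimal stand-in that makes the shape of the task concrete: report text goes in, and a diagnosis label (1 = ASD, 0 = no ASD) comes out. It uses a multinomial Naive Bayes classifier over word counts, written in plain Python. The class and function names are hypothetical. The four training sentences and their labels are invented for illustration and are not data from the study.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Crude tokenizer: lowercase and keep runs of letters only.
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesReportClassifier:
    """Hypothetical toy classifier: multinomial Naive Bayes, add-one smoothing, labels 0/1."""

    def fit(self, reports: list[str], labels: list[int]) -> "NaiveBayesReportClassifier":
        self.word_counts = {0: Counter(), 1: Counter()}
        self.doc_counts = Counter(labels)
        for text, label in zip(reports, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set(self.word_counts[0]) | set(self.word_counts[1])
        return self

    def log_score(self, text: str, label: int) -> float:
        # log P(label) + sum of log P(word | label) over the words in the text.
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        counts = self.word_counts[label]
        denom = sum(counts.values()) + len(self.vocab)
        for word in tokenize(text):
            if word in self.vocab:  # words never seen in training are skipped
                score += math.log((counts[word] + 1) / denom)
        return score

    def predict(self, text: str) -> int:
        # 1 = report resembles the ASD-labelled examples, 0 = it does not.
        return int(self.log_score(text, 1) > self.log_score(text, 0))


# Invented toy examples (NOT data from the study): 1 = ASD, 0 = no ASD.
train_reports = [
    "Lines up toys repeatedly and has an intense interest in train schedules.",
    "Covers ears at everyday sounds and repeats the same hand movements.",
    "Plays cooperatively with peers and adapts easily to changes in routine.",
    "Age-appropriate play; no unusual sensory reactions reported.",
]
train_labels = [1, 1, 0, 0]

clf = NaiveBayesReportClassifier().fit(train_reports, train_labels)
print(clf.predict("Repeats hand movements and lines up objects."))  # → 1
```

A word-count model like this ignores word order. In "no unusual sensory reactions", for example, the word "sensory" still counts as evidence despite the negation. Capturing that kind of context is one reason language models are attractive for reading free-text clinical reports.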

The results were striking: the models classified the reports with remarkable accuracy, even when key information such as the patient’s sex or age was hidden. This suggested that the reports contained implicit language patterns, detectable by the models, that reflected how clinicians make decisions.
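Hiding sex or age from a model usually means redacting the words that reveal them before the text is classified. The article does not describe how the study did this. Below is a minimal sketch using simple regular-expression rules. The word list, the age pattern and the `mask_demographics` name are hypothetical. Real clinical de-identification is considerably harder.

```python
import re

# Hypothetical redaction rules: words that reveal sex, and common age phrases.
SEX_TERMS = r"\b(he|she|him|her|his|hers|boy|girl|male|female|son|daughter)\b"
AGE_PATTERN = r"\b\d{1,2}[- ]year[- ]old\b|\baged? \d{1,2}\b"


def mask_demographics(report: str) -> str:
    """Replace sex- and age-revealing tokens with neutral placeholders."""
    # Mask ages first so numbers inside age phrases are caught as a unit.
    masked = re.sub(AGE_PATTERN, "[AGE]", report, flags=re.IGNORECASE)
    # Whole-word matching: "he" inside "The" is left alone.
    masked = re.sub(SEX_TERMS, "[SEX]", masked, flags=re.IGNORECASE)
    return masked


print(mask_demographics("The 7-year-old boy says he likes trains."))
# → The [AGE] [SEX] says [SEX] likes trains.
```

If a classifier still performs well on text masked this way, its predictions cannot simply be relying on the explicit sex or age words.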

What did they find?

Beyond prediction accuracy, the most interesting aspect was what the models revealed about the diagnostic process itself. By analyzing which text fragments carried the most weight in the models’ decisions, the researchers found that certain types of behaviors and descriptions were more decisive than others.
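One common way to ask which fragments of a text carry weight is occlusion: remove one sentence at a time and measure how much the model's score drops. The study may have used a different attribution method, so this sketch only illustrates the general idea. The `score` function and its word weights are invented stand-ins for a trained model. They are not values from the study.

```python
import re

# Hypothetical stand-in for a trained model: invented per-word weights that
# push the score toward an ASD label. NOT taken from the study.
WORD_WEIGHTS = {"repeats": 1.5, "lines": 1.0, "trains": 1.2, "contact": 0.3}


def score(text: str) -> float:
    """Toy linear score: sum of the weights of the words in the text."""
    return sum(WORD_WEIGHTS.get(w, 0.0) for w in re.findall(r"[a-z]+", text.lower()))


def split_sentences(report: str) -> list[str]:
    # Naive splitter: break after ., ! or ? followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", report.strip()) if s]


def occlusion_attribution(report: str) -> list[tuple[str, float]]:
    """Rank sentences by how much the score drops when each one is removed."""
    sentences = split_sentences(report)
    full = score(report)
    results = []
    for i, sentence in enumerate(sentences):
        without = " ".join(sentences[:i] + sentences[i + 1:])
        results.append((sentence, full - score(without)))
    return sorted(results, key=lambda pair: pair[1], reverse=True)


report = ("He repeats the same phrases. He rarely makes eye contact with peers. "
          "He lines up trains for hours.")
for sentence, weight in occlusion_attribution(report):
    print(f"{weight:+.2f}  {sentence}")
```

With a real model the score is not a simple sum, so removing a sentence can also change how the remaining text is interpreted. Occlusion captures that interaction, which is part of its appeal.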

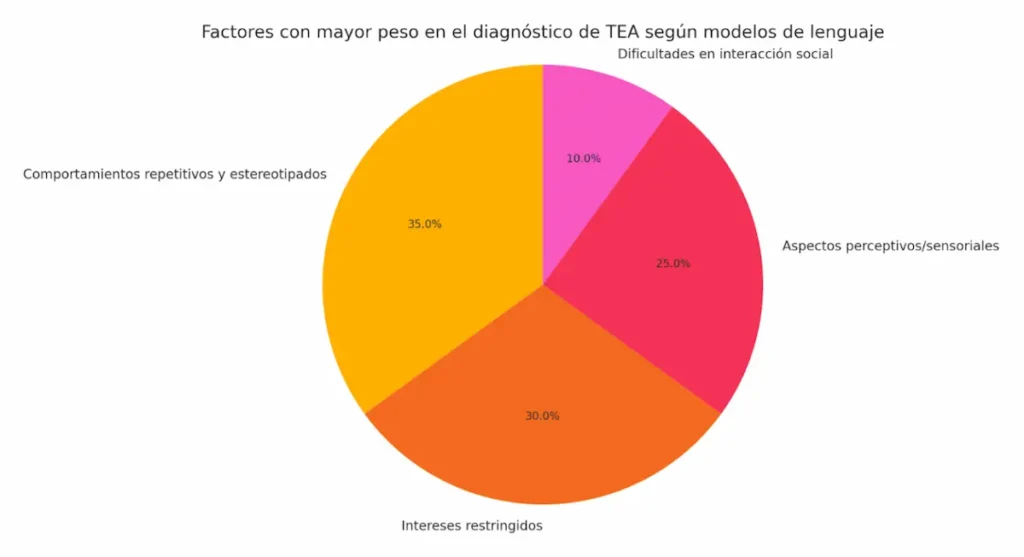

As we can see in the chart, repetitive, stereotyped behaviors, restricted interests and aspects related to sensory perception were the factors most associated with a positive ASD diagnosis. In contrast, difficulties in social interaction, one of the traditional pillars of diagnosis according to the DSM-5, carried less weight in the models.

This does not mean that social difficulties are irrelevant. Rather, in practice, clinicians seem to pay more attention, perhaps unconsciously, to other behavioral patterns when deciding whether a patient meets the diagnostic criteria.

The table below summarizes the main results of the study:

| Aspect analyzed | Result / observation |
|---|---|
| Models used | GPT-4 and Clinician-LLaMA (language models trained on clinical reports). |
| Data source | Over 40,000 pediatric clinical reports from the Massachusetts public health system. |
| Model task | Predict whether the patient had an autism diagnosis based solely on the report text. |
| Model accuracy | High, even when variables such as sex or age were hidden. |
| Most decisive factors | Repetitive behaviors, restricted interests and sensory/perceptual traits. |
| Less decisive factors | Difficulties in social interaction. |
| Key implication | In clinical practice, observable behaviors carry more weight than expected. |
| Potential impact on diagnostic criteria | Suggests the need to reevaluate the weight of certain criteria in the DSM-5. |
| Role of AI in mental health | A tool for diagnostic support and for analyzing clinical reasoning. |

As the table shows, the language models not only predicted ASD diagnoses with high accuracy but also revealed that certain behavioral patterns are more influential in clinical practice than the formal diagnostic criteria would suggest. This applies particularly to repetitive behaviors and restricted interests. The finding invites reflection on how those criteria are applied in real-world settings.

Implications: should we rethink the diagnostic criteria for autism?

These findings open an important discussion: do current diagnostic criteria really reflect the way professionals evaluate patients?

If clinicians systematically give more importance to observable behaviors such as stereotypies or restricted interests, it may be necessary to reevaluate the weight given to each diagnostic category in official guidelines.

This approach could also inform the training of new professionals, who would benefit from seeing how the criteria are applied in real practice and not only in theory.

Can artificial intelligence help in the clinical diagnosis of ASD?

One of the great promises of artificial intelligence in the health field is its ability to detect complex patterns in large volumes of data. In this case, language models act not only as classification tools but also as instruments that make the invisible visible: the implicit logic behind clinical decisions.

Far from replacing professionals, these models can act as allies, offering a second opinion based on thousands of previous cases, and helping to detect biases or inconsistencies in diagnostic processes.

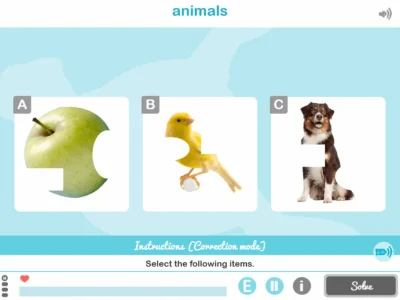

Where could NeuronUP contribute in studies like this?

NeuronUP could contribute significantly to studies like this by facilitating replication in more diverse and non–English-speaking populations, thanks to its international presence. Its platform, with hundreds of cognitive activities, would make it possible to complement the analysis of clinical reports with structured data on cognitive performance. The same approach could also be applied to other clinical conditions, such as ADHD or mild cognitive impairment, to improve early detection and diagnostic accuracy.

Conclusion of the study

This study marks a milestone at the intersection of artificial intelligence and mental health. By using language models to analyze clinical reports, the researchers have not only demonstrated that autism diagnosis can be predicted with remarkable accuracy, but also revealed how the “clinical intuition” that guides these decisions is constructed.

In the near future, tools like these could be integrated into health systems to provide diagnostic support, improve professional training, and perhaps even redefine the criteria with which we understand autism. What is clear is that artificial intelligence is not only transforming technology, but also our way of understanding the human mind.

References

- Feng S, Sondhi R, Tu X, Buckley J, Sands A, Comiter A, Zhang H, Gao R, Sragovich S, Mello JD, Fedorenko E, Saxe R, Sontheimer EJ, Sapiro G, O’Reilly UM, McCoy TH, Beam AL. Large language models deconstruct the clinical intuition behind diagnosing autism. Cell. 2024 Mar 21. doi: 10.1016/j.cell.2024.03.004.

If you enjoyed this blog post about how ChatGPT-style language models can help in the diagnosis of autism, you will surely be interested in these NeuronUP articles:

This article has been translated. Link to the original article in Spanish:

¿Pueden los modelos de lenguaje tipo ChatGPT ayudar a diagnosticar el autismo? (Can ChatGPT-style language models help diagnose autism?)

Neurorehabilitation and stimulation of executive functions in the workplace

